Title: Quasar - Knowledge Base

Preface

This document contains specialized Quasar topics as well as additional information that can be useful next to the Quick Reference Manual.

From time to time, I will add a number of generally unrelated topics here, with the goal to later integrate them in the Quick Reference Manual.

The pages of the wiki are written with the program markpad. I managed to compile the wiki with PDFLaTeX (using the handy tool pandoc), so the PDF of the wiki will also be enclosed in the Quasar GIT Distribution. As a result of the conversion, the PDF hyperlinks currently do not work.

Warning for interpreted for-loops

The Quasar compiler generates warnings when a loop fails to parallelize (or serialize), like:

For-loop will be interpreted. This may cause performance degradation.

In case no matrices are created inside the for-loop, you may consider

automatic for-loop parallelization/serialization (which is now turned off).Consider the following example:

im = imread("/u/kdouterl/Desktop/Quasar test/image.png")

im_out = zeros(size(im))

gamma = 1.1

tic()

{!parallel for}

for i=0..size(im,0)-1

for j=0..size(im,1)-1

for k=0..size(im,2)-1

im_out[i,j,k] = im[i,j,k]^gamma

endfor

endfor

endfor

toc()

fig1 = imshow(im)

fig2 = imshow(im_out)

fig1.connect(fig2)Without {!parallel for}, the for-loops are interpreted (which takes significantly more time than without). Even though the Quasar->EXE compiler is improving to handle this in a more reasonable time, it is best to be aware of this issue. Therefore this warning message is raised.

To solve this problem (i.e. to improve the performance), you can either:

turn on the automatic for-loop parallelizer in Program Settings in Redshift

use

{!parallel for}in case there are no dependenciesuse

{!serial for}in case of dependencies

Note that the following conditions must be met:

The for-loop may not contain

parallel_docallsThe for-loop may not contain function calls (unless the functions are declared with the

__device__specifier)No dynamic memory may be allocated from the for-loop (e.g.

zeros,ones,randn,uninit, …). Note that it is still possible to create vectors of a fixed length <= 16 (for examplezeros(8),ones(3)). These vectors are stored in local GPU memory (registers).

Notes

{!parallel for}is a shorthand for the previous#pragma force_parallel(which is deprecated now).{!serial for}is a shorthand for the previous#pragma force_serial(which is deprecated now).- in some cases (e.g. for debugging purposes), it may be useful to indicate that the loop is interpreted. This can be obtained using the code attribute

{!interpreted for}. # Parallel Reduction Patterns

An often recurring programming idiom is the use of atomic operations for data aggregation (e.g. to calculate a sum). I noted this when inspecting the code from several Quasar users. In the most simple form, this idiom is as follows (called the JDV variant):

total = 0.0

#pragma force_parallel

for m=0..511

for n=0..511

total += im[m,n]

endfor

endforHowever, it could also be more sophisticated as well (called the HQL variant):

A = zeros(2,2)

#pragma force_parallel

for i=0..255

A[0,0] += x[i,0]*y[i,0]

A[0,1] += x[i,0]*y[i,1]

A[1,0] += x[i,1]*y[i,0]

A[1,1] += x[i,1]*y[i,1]

endforHere, the accumulator variables are matrix elements, also multiple accumulators are used inside a for loop.

Even though this code is correct, the atomic add (+=) may result in a poor performance on GPU devices, due to all adds being serialized in the hardware (all threads need to write to the same location in memory, so there is a spin-lock that basically serializes all the memory write accesses). The performance is often much worse than performing all operations in serial!

The obvious solution is the use of shared memory, thread synchronization in combination with parallel reduction patterns. I found that such algorithms are actually quite hard to write well, taking all side-effects in consideration, such as register pressure, shared memory pressure. To avoid Quasar users from writing these more sophisticated algorithms, the Quasar compiler now detects the above pattern, under the following conditions:

- All accumulator expressions (e.g.

total,A[0,0]) should be 1) variables, 2) expressions with constant numeric indices or 3) expressions with indices whose value does not change during the for-loop.

- The accumulator variables should be scalar numbers. Complex-valued numbers and fixed-length vectors are currently not (yet) supported.

- Only full dimensional parallel reductions are currently supported. A sum along the rows or columns can not be handled yet.

- There is an upper limit on the number of accumulators (due to the size limit of the shared memory). For 32-bit floating point, up to 32 accumulators and for 64-bit floating point, up to 16 accumulators are supported. When the upper limit is exceeded, the generated code will still work, but the block size will silently be reduced. This, together with the impact on the occupancy (due to high number of registers being used) might lead to a performance degradation.

- Several atomic operations are supported:

+=(add),-=(subtract),*=(multiply),~=(bitwise exclusive OR),|=(bitwise OR),&=(bitwise AND),__=(minimum) and^^=(maximum).

For the first example, the loop transformer will generate the following code:

function total:scalar = __kernel__ opt__for_test1_kernel(im:mat,$datadims:ivec2,blkpos:ivec2,blkdim:ivec2)

% NOTE: the for-loop on line 14 was optimized using the parallel reduction loop transform.

$bins=shared(blkdim[0],blkdim[1],1)

$accum0=0

$m=blkpos[0]

while ($m<$datadims[0])

$n=blkpos[1]

while ($n<$datadims[1])

$accum0+=im[$m,$n]

$n+=blkdim[1]

endwhile

$m+=blkdim[0]

endwhile

$bins[blkpos[0],blkpos[1],0]=$accum0

syncthreads

$bit=1

while ($bit<blkdim[0])

if (mod(blkpos[0],(2*$bit))==0)

$bins[blkpos[0],blkpos[1],0]=($bins[blkpos[0],blkpos[1],0]+

$bins[(blkpos[0]+$bit),blkpos[1],0])

endif

syncthreads

$bit*=2

endwhile

$bit=1

while ($bit<blkdim[1])

if (mod(blkpos[1],(2*$bit))==0)

$bins[blkpos[0],blkpos[1],0]=($bins[blkpos[0],blkpos[1],0]+

$bins[blkpos[0],(blkpos[1]+$bit),0])

endif

syncthreads

$bit*=2

endwhile

if (sum(blkpos)==0)

total+=$bins[0,0,0]

endif

endfunction

$blksz=max_block_size(opt__for_test1_kernel,min([16,32],[512,512]))

total=parallel_do([$blksz,$blksz],im,[512,512],opt__for_test1_kernel)Note that variables starting with $ are only used internally by the compiler, so please do not use them yourself.

Some results (NVidia Geforce 435M), for 100 iterations:

#pragma force_parallel (atomic add): 609 ms

#pragma force_serial: 675 ms

#pragma force_parallel (reduction pattern): 137 ms (NEW)So in this case, the parallel reduction pattern results in code that is about 4x-5x faster.

Conclusion: 5x less code and 5x faster computation time!

Automatic serialization of for-loops:

(Note: serialization is not to be confused with the serialization of data to a binary stream. This operation is performed by the function save)

The Quasar compiler will now automatically serialize for-loops using the C++ code generation back-end whenever a read/write dependency is detected.

In the following example, there is a dependency between different runs of the loop on “a” (each time, a is multiplied by 0.9999). The compiler will detect this dependency, and will generate serial code (equivalent to force_serial) for this for-loop. The serial code will then be executed on the CPU.

a = 1.0

y = zeros(1e6)

for k = 0..numel(y)-1

a = a * 0.9999

y[k] = a

endfor

plot(y[0..9999])The compiler outputs:

Warning: auto_serialize.q - line 11: Warnings during parallelization of the 1-dim FOR loop.

Line 13 - 'a': possible data dependency detected for variable! (type 'scalar'). Switching to serialization.

Optimization: auto_serialize.q - line 11: Automatic serialization of the 1-dim FOR loop.Practically, this means that it will be easier for you to earn some new medals:-) # Recursive lambda expressions

In the past

Originally, the conditional IF x ? a : b was implemented using a function x ? a : b = cond_if(x,a,b). This had the consequence that both a and b needed to be evaluated, in other to compute the end result.

This may have undesired side-effects, for example in case of recursive functions, such as the factorial function:

fact = x -> x == 1 ? 1 : x * fact(x - 1)When implementing this in Quasar, this would inevitably lead to a stack overflow exception, because the evaluation of fact(x) would never end.

A temporary workaround was by introducing the following reduction:

reduction (a, b : scalar, c : scalar) -> (a ? b : c) = (a? (()->b) : (()->c))()Reductions is a nice mechanism to extend the functionality and expressiveness of Quasar. What is happening here is that the different expressions a and b are wrapped into a lambda expression, with 0 arguments. This is as simple as defining ()->b and ()->c. Next, the condition chooses which of both functions to use, and finally the evaluation is performed (...()).

One of the problems is that this reduction needed to be defined for every data type (not only scalar). Omitting the type scalar would on its own lead to a circular reduction definition, for which a compiler error is generated.

Now

Because a conditional IF for which not all arguments need to be evaluated is quite important, the Quasar interpreter has now built-in support for conditional evaluation that effectively solves the above problems. This means that the above reduction is no longer needed. So it is safe to write recursive functions as:

fact = x -> x == 1 ? 1 : x * fact(x - 1)

fib = n -> (n==1 || n==2) ? 1 : fib(n-1) + fib(n-2)The conditional IF is also used in the implementation of reductions with where clauses (see also Reduction where clauses).

Matrix Conditional Assignment

In MATLAB, it is fairly simple to assign to a subset of a matrix, for example, the values that satisfy a given condition. For example, saturation can be obtained as follows:

A[A < 0] = 0

A[A > 1] = 1In Quasar, this can be achieved with:

A = saturate(A)However, the situation can be more complex and then there is no direct equivalent to MATLAB. For example,

A[A > B] = Cwhere A, B, C are all matrices. The trick is to define a reduction (now in system.q):

type matrix_type : [vec|mat|cube|cvec|cmat|ccube]

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a > b] = c) = (x += (c - x) .* (a > b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a < b] = c) = (x += (c - x) .* (a < b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a <= b] = c) = (x += (c - x) .* (a <= b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a >= b] = c) = (x += (c - x) .* (a >= b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a == b] = c) = (x += (c - x) .* (a == b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a != b] = c) = (x += (c - x) .* (a != b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a && b] = c) = (x += (c - x) .* (a && b))

reduction (x : matrix_type, a : matrix_type, b, c) -> (x[a || b] = c) = (x += (c - x) .* (a || b))The first line defines a “general” matrix type, then is then used for the subsequent reductions. The reduction simply works on patterns of the form:

x[a #op# b] = cand replaces them by the appropriate Quasar expression. The last two reductions are a trick, to get the conditional assignment also working with boolean expressions, such as:

A[A<-0.1 || A>0.1] = 5Note that, on the other hand:

B = A[A<-0.1]will currently result in a runtime error (this syntax is not defined yet).

Matrix data types and type inference

Quasar is an array language, this means that array types (vec, mat and cube) are primitive types and have built-in support (for example, this is in contrast with C/C++ where the user has to define it’s own matrix classes).

The reason for the built-in support is of course that this enables easier mapping of Quasar programs to different parallel devices (GPU, …). Moreover, the user is forced to use one representation for its data (rather than using different class libraries, where it is necessary to wrap one matrix class into another matrix class).

On the other hand, by default Quasar abstracts numeric values into one data type scalar. The type scalar just represents a scalar number, and whether this is a floating point number or a fix point number with 16/32/64-bit precision is actually implementation specific (note currently the Quasar runtime system only supports 32-bit and 64-bit floating point numbers).

Type parameters

For efficiency reasons, there is also support for integer data types int, int8, int16, int32, int64, uint8, uint16, uint32, uint64. (Please note that using 64-bit types can suffer from precision errors, because all the calculations are performed in scalar format). To support matrices built of these types, the array types vec, mat and cube are parametric, for example

vec[int8]denotes a vector (1D array) of 8-bit signed integerscube[int]denotes a cube (3D array) of signed integers (note: by default, int is 32-bit).

To simplify the types (and to reduce key strokes while programming), there are a number of built-in type aliases:

type vec : vec[scalar] % real-valued vector

type cvec : vec[cscalar] % complex-valued vector

type mat : mat[scalar] % real-valued vector

type cmat : mat[cscalar] % complex-valued vector

type cube : cube[scalar] % real-valued vector

type ccube : cube[cscalar] % complex-valued vectorPlease note that these types are just aliases! For example, cube is just cube[scalar] and not cube[something else]:

a = cube[scalar](10)

assert(type(a, "cube")) % Successful

b = cube[int](10)

assert(type(b, "cube")) % Unsuccessful - compiler errorHowever, in case the intention is to check whether a or b is a 3D array regardless of the element type, the special ?? type can be used:

b = cube[int](10)

assert(type(b, "cube[??]")) % SuccessfulType inference

When the type is not specified (for example data that is read dynamically from a file, using the load("data.qd") function), the default data type is ‘??’. This is a very generic type, every type comparison with ?? results in TRUE. For example:

assert(type(1i+1, '??'))

assert(type([1,2,3], '??'))However, using variables of type ?? will prevent the compiler to optimize whole operations (for example, applying reductions or automatically generating kernel functions for for-loops). Therefore, it is generally a bad idea to have functions return variables of unspecified type ‘??’ and correspondingly the compiler gives a warning message when this is the case.

Practically, the type inference starts from the matrix creation functions zeros, ones, imread, … that have a built-in mechanism for deciding the type of the result (based on the parameters of the function).

For example:

A = zeros([1,1,4])creates a vector of length 4 (vec)B = zeros([2,3])creates a matrix of dimensions2 x 3(mat).C = imread("data.tif")creates acubeat all times.

Note that the type inference also works when a variable is passed to the matrix creation functions:

sz = [1,1,4]; A = zeros(sz)

In this case, the compiler knows that sz is a constant vector, it keeps track of the value and uses it for determining the type of zeros.

However: the compiler cannot do this when the variable sz is passed as argument of a function:

function A = create_data(sz)

A = zeros(sz)

endfunctionIn this case, because the type of sz is unknown, the compiler cannot determine the type of A and will therefore use the default type ??. For convenience, the compiler then also generates a warning message “could not determine the type of output argument A”. The solution is then simply to specify the type of sz:

function A = create_data(sz : ivec2)

A = zeros(sz)

endfunctionThis way, the compiler knows that sz is a vector of length 2, and can deduce the type of A, which is a matrix (mat).

Summary

The type system can be summarized as follows. There are 6 categories of types:

Primitive scalar types

scalar,cscalar,int,int8, …Matrix types

vec,mat,cubewith parametrized versions

vec[??],mat[??],cube[??].Classes:

type R : class/type T : mutable classFunction types

[?? -> ??],[(??,??)->(??,??)], …Device functions:

[__device__ ?? -> ??]Kernel functions:[__kernel__ ?? -> ??]Individual types

typeType classes:

T : [scalar|mat|cube]

Finally, different types can be combined to define new types.

Exercise:

Figure out what the following type means:

type X : [vec[ [??->[int|mat|cube[??->??] ] | int -> ?? | __device__ mat->() ] | cscalar ]Just kidding;-)

Matrix multiplication exploiting CUDA’s block-based architecture

Matrix multiplication in CUDA is so much fun that some people write books on this topic (see http://www.shodor.org/media/content//petascale/materials/UPModules/matrixMultiplication/moduleDocument.pdf). The following is the block-based solution proposed by NVidia. The solution exploits shared memory to reduce the number of accesses to global memory.

__global__ void MatMulKernel(Matrix A, Matrix B, Matrix C)

{

// Block row and column

int blockRow = blockIdx.y, blockCol = blockIdx.x;

// Each thread block computes one sub-matrix Csub of C

Matrix Csub = GetSubMatrix(C, blockRow, blockCol);

// Each thread computes 1 element of Csub accumulating results into Cvalue

float Cvalue = 0.0;

// Thread row and column within Csub

int row = threadIdx.y, col = threadIdx.x;

// Loop over all the sub-matrices of A and B required to compute Csub

for (int m = 0; m < (A.width / BLOCK_SIZE); ++m)

{

// Get sub-matrices Asub of A and Bsub of B

Matrix Asub = GetSubMatrix(A, blockRow, m);

Matrix Bsub = GetSubMatrix(B, m, blockCol);

// Shared memory used to store Asub and Bsub respectively

__shared__ float As[BLOCK_SIZE][BLOCK_SIZE];

__shared__ float Bs[BLOCK_SIZE][BLOCK_SIZE];

// Load Asub and Bsub from device memory to shared memory

// Each thread loads one element of each sub-matrix

As[row][col] = GetElement(Asub, row, col);

Bs[row][col] = GetElement(Bsub, row, col);

__syncthreads();

// Multiply Asub and Bsub together

for (int e = 0; e < BLOCK_SIZE; ++e)

Cvalue += As[row][e] * Bs[e][col];

__syncthreads();

}

// Each thread writes one element of Csub to memory

SetElement(Csub, row, col, Cvalue);

}(Note - some functions omitted for clarity)

However, this implementation is only efficient when the number of rows of matrix A is about the same as the number of cols of A. In other cases, performance is not optimal. Second, there is the issue that this version expects that the matrix dimensions are a multiple of BLOCK_SIZE. Why use a 3x3 matrix if we can have a 16x16?

In fact, there are 3 cases that need to be considered (let n < N):

nxNxNxn: The resulting matrix is small: in this case, it is best to use the parallel sum algorithm.NxNxNxN: The number of rows/cols of A are more or less equal: use the above block-based algorithm.NxnxnxN: The resulting matrix is large: it is not beneficial to use shared memory.

The following example illustrates this approach in Quasar:

% Dense matrix multiplication - v2.0

function C = dense_multiply(A : mat, B : mat)

% Algorithm 1 - is well suited for calculating products of

% large matrices that have a small matrix as end result.

function [] = __kernel__ kernel1(a : mat'unchecked, b : mat'unchecked, c : mat'unchecked, _

blkdim : ivec3, blkpos : ivec3)

n = size(a,1)

bins = shared(blkdim)

nblocks = int(ceil(n/blkdim[0]))

% step 1 - parallel sum

val = 0.0

for m=0..nblocks-1

if blkpos[0] + m*blkdim[0] < n % Note - omitting [0] gives error

d = blkpos[0] + m*blkdim[0]

val += a[blkpos[1],d] * b[d,blkpos[2]]

endif

endfor

bins[blkpos] = val

% step 2 - reduction

syncthreads

bit = 1

while bit < blkdim[0]

if mod(blkpos[0],bit*2) == 0

bins[blkpos] += bins[blkpos + [bit,0,0]]

endif

syncthreads

bit *= 2

endwhile

% write output

if blkpos[0] == 0

c[blkpos[1],blkpos[2]] = bins[0,blkpos[1],blkpos[2]]

endif

endfunction

% Algorithm 2 - the block-based algorithm, as described in the CUDA manual

function [] = __kernel__ kernel2(A : mat'unchecked, B : mat'unchecked, C : mat'unchecked, _

BLOCK_SIZE : int, pos : ivec2, blkpos : ivec2, blkdim : ivec2)

% A[pos[0],m] * B[m,pos[1]]

sA = shared(blkdim[0],BLOCK_SIZE)

sB = shared(BLOCK_SIZE,blkdim[1])

sum = 0.0

for m = 0..BLOCK_SIZE..size(A,1)-1

% Copy submatrix

for n = blkpos[1]..blkdim[1]..BLOCK_SIZE-1

sA[blkpos[0],n] = pos[0] < size(A,0) && m+n < size(A,1) ? A[pos[0],m+n] : 0.0

endfor

for n = blkpos[0]..blkdim[0]..BLOCK_SIZE-1

sB[n,blkpos[1]] = m+n < size(B,0) && pos[1] < size(B,1) ? B[m+n,pos[1]] : 0.0

endfor

syncthreads

% Compute the product of the two submatrices

for n = 0..BLOCK_SIZE-1

sum += sA[blkpos[0],n] * sB[n,blkpos[1]]

endfor

syncthreads

endfor

if pos[0] < size(C,0) && pos[1] < size(C,1)

C[pos] = sum % Write the result

endif

endfunction

% Algorithm 3 - the most straightforward algorithm

function [] = __kernel__ kernel3(A : mat'unchecked, B : mat'unchecked, C : mat'unchecked, _

pos : ivec2)

sum = 0.0

for m=0..size(A,1)-1

sum += A[pos[0],m]*B[m,pos[1]]

endfor

C[pos] = sum

endfunction

[M,N] = [size(A,0),size(B,1)]

C = zeros(M,N)

if M <= 4

P = prevpow2(max_block_size(kernel1,[size(A,1),M*N])[0])

parallel_do([P,M,N],A,B,C,kernel1)

elseif size(A,1)>=8 && M >= 8

P = min(32, prevpow2(size(A,1)))

blk_size = max_block_size(kernel2,[32,32])

sz = ceil(size(C,0..1) ./ blk_size) .* blk_size

parallel_do([sz,blk_size],A,B,C,P,kernel2)

else

parallel_do(size(C),A,B,C,kernel3)

endif

endfunctionAssertions in kernel code

Assertions can be put inside a kernel function:

function [] = __kernel__ kernel (pos : ivec3)

b = 2

assert(b==3)

endfunctionIn this example, the assertion obviously fails. Quasar breaks with the following error message:

(parallel_do) test_kernel - assertion failed: line 23Also see CUDA Error handling for more information about assertions and error handling.

Functions in Quasar

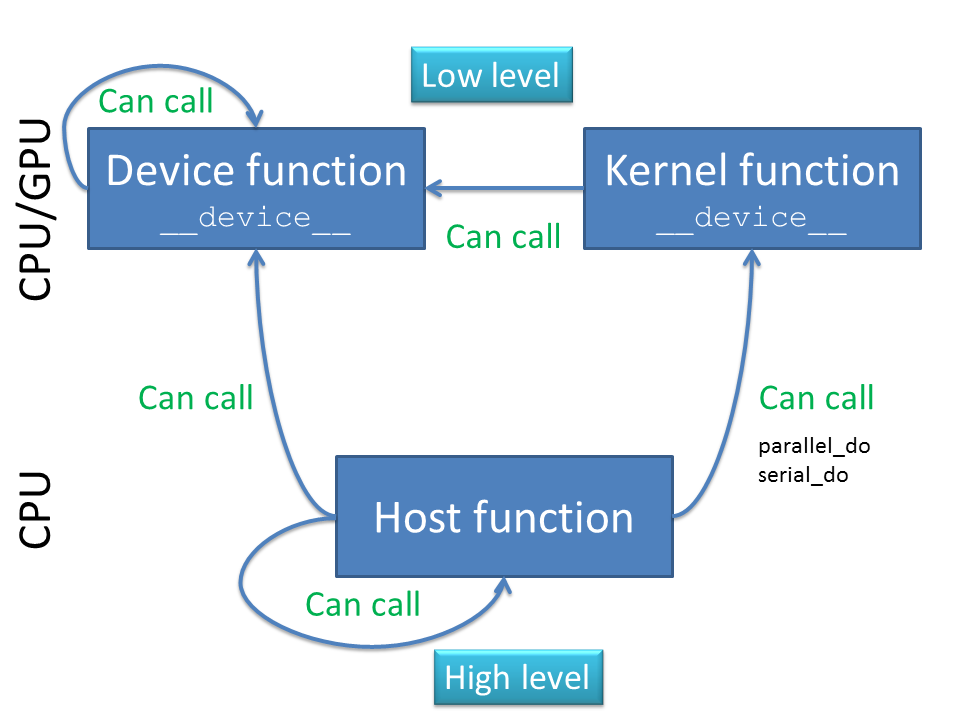

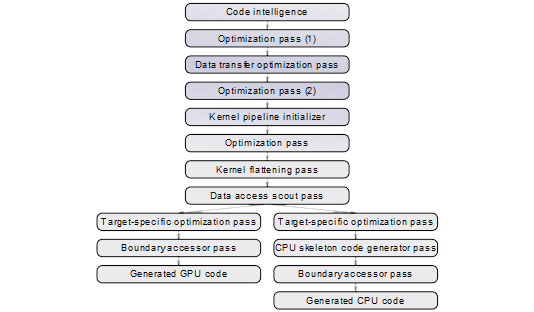

The following diagram illustrates the relationship between __kernel__, __device__ and host functions:

Summarized:

Both

__kernel__and__device__functions are low-level functions, they are natively compiled for CPU and/or GPU. This has the practical consequence that the functionality available for these functions is restricted. It is for example not possible toprint,loadorsaveinformation inside kernel or device functions.Host functions are high-level functions, typically they are interpreted (or Quasar EXE’s, compiled using the just-in-time compiler).

A kernel function is normally repeated for every element of a matrix. Kernel functions can only be called from host code (although in future support for CUDA 5.0 dynamic parallelism, this may change).

A device function can be called from host code, in which case it is normally interpreted (if not inlined), or from other device/kernel functions, in which case it is natively compiled.

The distinction between these three types of functions is necessary to allow GPU programming. Furthermore, it provides a mechanism (to some extent) to balance the work between CPU/GPU. As programmer, you know whether the code inside the function will be run on GPU/CPU. # Multi-level breaks in sequential loops

Sometimes, small language features can make a lot of difference (in terms of code readability, productivity etc.). In Quasar, multi-dimensional for-loops are quite common. Recently, I came across a missing feature for dealing with multi-dimensional loops.

Suppose we have a multi-dimensional for-loop, as in the following example:

for m=0..511

for n=0..511

im_out[m,n] = 255-im[m,n]

if m==128

break

endif

a = 4

endfor

endforSuppose that we want to break outside the loop, as in the above code. This is useful for stopping the processing at a certain point. There is only one caveat: the break-statement only applies to the loop that surrounds it. In the above example, the processing of row 128 is simply stopped at column 0 (the loop over n is interrupted), but it is then resumed starting from row 129. Some programmers are not aware of this, sometimes this can lead to less efficient code, as in the following example:

for j = 0..size(V,0)-1

for k=0..size(V,1)-1

if V[j,k]

found=[j,k]

break

endif

endfor

endforHere we perform a sequential search, to find the first matrix element for which V[j,k] != 0. When this matrix element is found, the search is stopped. However, because the break statement stops the inner loop, the outer loop is still executed several times (potentially leading to a performance degradation).

1. Solution with extra variables

To make sure that we break outside the outer loop, we would have to introduce an extra variable:

break_outer = false

for j = 0..size(V,0)-1

for k=0..size(V,1)-1

if V[j,k]

found=[j,k]

break_outer = true

break

endif

endfor

if break_outer

break

endif

endforIt is clear that this approach is not very readible. The additional variable break_outer is also a bit problematic (in the worst case, if the compiler can not filter it out, extra stack memory/registers will be required).

2. Encapsulation in a function

An obvious alternative is the use of a function:

function found = my_func()

for j = 0..size(V,0)-1

for k=0..size(V,1)-1

if V[j,k]

found=[j,k]

break

endif

endfor

endfor

endfunction

found = my_func()However, the use of function is sometimes not desired for this case. It also involves extra work, such as adding the input/output parameters and adding a function call.

3. New solution: labeling loops

To avoid the above problems, it is now possible to label the for loops (as in e.g. ADA, java):

outer_loop:

for j = 0..size(V,0)-1

inner_loop:

for k=0..size(V,1)-1

if V[j,k]

found=[j,k]

break outer_loop

endif

endfor

endfor

Providing labels to for-loops is optional, i.e. you only have to do it when it is needed. The new syntax is also supported by the following finding in programming literature: > In 1973 S. Rao Kosaraju refined the structured program theorem by proving that it’s possible to avoid adding additional variables in structured programming, as long as arbitrary-depth, multi-level breaks from loops are allowed. [11]

Note that Quasar has no goto labels (it will never have). The reasons are:

- Control flow blocks can always be used instead. Control flow blocks offer more visual cues which enhances the readability of the code.

- At the compiler-level, goto labels may make it more difficult to optimize certain operations (e.g. jumps to different scopes).

Remarks:

- This applies to the keyword

continueas well. - Labels can be applied to

for,repeat…untilandwhileloops. - In the future, more compiler functionality may be added to make use of the loop labels. For example, it may be possible to indicate that multiple loops (with the specified names) must be merged.

- It is not possible to break outside a parallel loop! The reason is that the execution of the different threads is (usually) non-deterministic, hence using breaks in parallel-loops would result in non-deterministic results.

- However, loop labels can be attached to either serial/parallel loops. A useful situation is an iterative algorithm with an inner/outer loop. # Placeholder ’_’ for functions with multiple return values

(feature originally suggested by Dirk Van Haerenborgh)

In Quasar, it is possible to define functions with multiple return values. An example is the sincos function which calculates the sine and the cosine of an angle simultaneously:

function [s, c] = sincos(theta)

...

endfunctionSometimes, your function may return several values, such as an image and a corresponding weight matrix:

function [img, weight] = process(input_img)

...

endfunctionand it may happen that the weight image is in practice not needed on the caller side. Instead of introducing dummy variables, it is now possible to explicitly indicate that a return value is not needed (e.g. as in Python):

[img, _] = process(input_img)This way, the Quasar compiler is aware that it is not your intention to do something with the unused return value. In the future, it may even be possible that the output variable weight is optimized away (and not even calculated inside the function process).

In case you would - for some reason - forget to capture the return values, such as:

process(input_img)Then the compiler will generate a warning message, stating:

[Performance warning] Function ‘process’ returns 2 values that are ignored here. Please eliminate the return values in the function signature (when possible) or use the placeholder ‘[_,_]=process(.)’ to explicitly capture the unused return values.

In this case, it is recommended that you explicitly indicate the return values that are unused:

[_,_] = process(input_img)Variadic Functions and the Spread Operator

A new feature is the support for variadic functions in Quasar. Variadic functions are functions that can have a variable number of arguments. For example,

function [] = func(... args)

for i=0..numel(args)-1

print args[i]

endfor

endfunction

func(1, 2, "hello")Here, args is called a rest parameter (which is similar to ECMAScript 6). How does this work: when the function func is called, all arguments are packed in a cell vector which is passed to the function. Optionally, it is possible to specify the types of the arguments:

function [] = func(... args:vec[string])which indicates that every argument must be a string, so that the resulting cell vector is a vector of strings.

Several library functions in Quasar already support variadic arguments (e.g. print, plot, …), although now it is possible to define your own functions with variadic arguments.

Moreover, a function may have a set of fixed function parameters, optional function parameters and variadic parameters. The variadic parameters should always appear at the end of the function list (otherwise a compiler error will be generated)

function [] = func(a, b, opt1=1.0, opt2=2.0, ...args)

endfunctionThis way, the caller of func can specify extra arguments when desired. This allows adding extra options for e.g., solvers.

Variadic device functions

It is also possible to define device functions supporting variadic arguments. These functions will be translated by the back-end compilers to use cell vectors with dynamically allocated memory (it is useful to consider that this may have a small performance cost).

An example:

function sum = __device__ mysum(... args:vec)

sum = 0.0

for i=0..numel(args)-1

sum += args[i]

endfor

endfunction

function [] = __kernel__ mykernel(y : vec, pos : int)

y[pos]= mysum(11.0, 2.0, 3.0, 4.0)

endfunctionNote that variadic kernel functions are currently not supported.

Variadic function types

Variadic function types can be specified as follows:

fn : [(...??) -> ()]

fn2 : [(scalar, ...vec[scalar]) -> ()]This way, functions can be declared that expect variadic functions:

function [] = helper(custom_print : [(...??) -> ()])

custom_print("Stage", 1)

...

custom_print("Stage", 2)

endfunction

function [] = myprint(...args)

for i=0..numel(args)-1

fprintf(f, "%s", args[i])

endfor

endfunction

helper(myprint)The spread operator

Unpacking vectors The spread operator unpacks one-dimensional vectors, allowing them to be used as function arguments or array indexers. For example:

pos = [1, 2]

x = im[...pos, 0]In the last line, the vector pos is unpacked to [pos[0], pos[1]], so that the last line is in fact equivalent with

x = im[pos[0], pos[1], 0]Note that the spread syntax ... makes the writing of the indexing operation a lot more convenient. An additional advantage is that the spread operator can be used, without knowing the length of the vector pos. Assume that you have a kernel function in which the dimension is not specified:

function [] = __kernel__ colortransform (X, Y, pos)

Y[...pos, 0..2] = RGB2YUV(Y[...pos, 0..2])

endfunctionThis way, the colortransform can be applied to a 2D RGB image, as well as a 3D RGB image.

Similarly, if you have a function taking three arguments, such as:

luminance = (R,G,B) -> 0.2126 * R + 0.7152 * G + 0.0722 * BThen, typically, to pass an RGB vector c to the function luminance, you would use:

c = [128, 42, 96]

luminance(c[0],c[1],c[2])Using the spread operator, this can simply be done as follows:

luminance(...c) Passing variadic arguments The spread operator also has a role when passing arguments to functions.

Consider the following function which returns two output values:

function [a,b] = swap(A,B)

[a,b] = [B,A]

endfunctionAnd we wish to pass both output values to one function

function [] = process(a, b)

...

endfunctionThen using the spread operator, this can be done in one line:

process(...swap(A,B))Here, the multiple values [a,b] are unpacked before they are passed to the function process. This feature is particularly useful in combination with variadic functions.

Notes:

Only vectors (i.e., with dimension 1) can currently be unpacked using the spread operator. This may change in the future.

Within kernel/device functions, the spread operator is currently supported on fixed-length vectors

vecX,cvecX,ivecX(this means: the compiler should be able to determine the length of the vector statically).Within host functions, cell vectors can be unpacked as well

The spread operator can be used for concatenating vectors and scalars:

a = [1,2,3,4] b = [6,7,8] c = [...a, 4, ...b]where

cwill be a vector of length 8. For small vectors, this is certainly a good approach. For long vectors, this technique may have a poor performance, due to the concatenation being performed on the CPU. In the future, the automatic kernel generator may be extended, to generate efficient kernel functions for the concatenation.

Variadic output parameters

The output parameter list does not support the variadic syntax .... Instead, it is possible to return a cell vector of a variable length.

function [args] = func_returning_variadicargs()

args = vec[??](10)

args[0] = ...

endfunctionThe resulting values can then be captured in the standard way as output parameters:

a = func_returning_variadicargs() % Captures the cell vector

[a] = func_variadicargs() % Captures the first element, and generates an

% error if more than one element is returned

[a, b] = func_variadicargs() % Captures the first and second elements and

% generates an error if more than one element

% is returnedAdditionally, using the spread operator, the output parameter list can be unpacked and passed to any function:

myprint(...func_variadicargs())The main function revisited

The main function of a Quasar program can be variadic, i.e. accepting a variable number of parameters.

function [] = main(...args)or

function [] = main(...args : vec[??])This way, parameters can be passed through the command-line:

Quasar.exe myprog.q 1 2 3 "string"There are in fact 4 possibilities:

main functions with fixed parameters

function [] = main(a, b)In this case, the user is required to specify the values for the parameters

main functions with fixed and/or optional parameters

function [] = main(a=0.0, b=0.5)This way, the programmer can specify a default value for the parameters

main functions with fixed/optional/variadic parameters

function [] = main(fixed, a=0.0, b=0.5, ...args)Using this approach, some of the parameters are mandatory, other parameters are optional. Additionally it is possible to pass extra values to the main function, which the program will process. # Construction of cell matrices

In Quasar, it is possible to construct cell vectors/matrices, similar to in MATLAB:

A = {1,2,3j,zeros(4,4)}Note: the old-fashioned alternative was to construct cell matrices using the function cell, or vec[vec], vec[mat], vec[cube], … For example:

A = cell(4)

A[0] = 1j

A[1] = 2j

A[2] = 3j

A[3] = 4jNote that this notation is not very elegant, compared to A={1j,2j,3j,4j}. Also it does not allow the compiler to fully determine the type of A (the compiler will find type(A) == "vec[??]" rather than type(A) == "cvec"). In the following section, we will discuss the type inference in more detail.

Type inference

Another new feature of the compiler is that it attempts to infer the type from cell matrices. In earlier versions, all cell matrices defined with the above syntax, had type vec[??]. Now, this has changed, as illustrated by the following example:

a = {[1, 2],[1, 2, 3]}

print type(a) % Prints vec[vec[int]]

b = {(x->2*x), (x->3*x), (x->4*x)}

print type(b) % Prints [ [??->??] ]

c = {{[1, 2],[1,2]},{[1, 2, 3],[4, 5, 6]}}

print type(c) % Prints vec[vec[vec[int]]]

d = { [ [2, 1], [1, 2] ], [ [4, 3], [3, 4] ]}

print type(d) % Prints vec[mat]

e = {(x:int->2*x), (x:int->3*x), (x:int->4*x)}

print type(e) % Prints vec[ [int->int] ]This allows cell matrices that are constructed with the above syntax to be used from kernel functions. A simple example:

d = {eye(4), ones(4,4)}

parallel_do(size(d[0]), d,

__kernel__ (d : vec[mat], pos : ivec2) -> d[0][pos] += d[1][pos])

print d[0]The output is:

[ [2,1,1,1],

[1,2,1,1],

[1,1,2,1],

[1,1,1,2] ]Boundary access modes in Quasar (see #23)

In earlier versions of Quasar, the boundary extension modes (such as 'mirror, 'circular) only affected the __kernel__ and __device__ functions.

To improve transparency, this is has recently changed. This has the consequence that the following get access modes needed to be supported by the runtime:

(no modifier) % =error (default) or zero extension (kernel, device function)

safe % zero extension

mirror % mirroring near the boundaries

circular % circular boundary extension

clamped % keep boundary values

unchecked % results undefined when reading outside

checked % error when reading outsideImplementation details: there is a bit of work involved, because it needs to be done for all data types (int8, int16, int32, uint8, uint16, uint32, single, double, UDT, object, …), for different dimensions (vec, mat, cube), and for both matrix getters / slice accessors. perhaps the reason that you will not see this feature implemented in other programming languages: 5 x 10 x 3 x 2 = 300 combinations (=functions to be written). Luckily the use of generics in C# alleviates the problem, reducing it (after a bit of research) to 6 combinations (!) where each algorithm has 3 generic parameters. Idem for CUDA computation engine.

Note that the modifier unchecked should be used with care: only when you are 100% sure that the function is working properly and that there are no memory accesses outside the matrix. A good approach is to use checked first, and when you find out that there never occur any errors, you can switch to unchecked, in other to gain a little speed-up (typically 20%-30% on memory accesses).

Now I would like to point out that the access modifiers are not part of type of the object itself, as the following example illustrates:

A : mat'circular = ones(10, 10)

B = A % boundary access mode: B : mat'circular

C : mat'unchecked = AHere, both B and C will hold a reference to the matrix A. However, B will copy the access modifier from A (through type inference) and C will override the access modifier of A. The result is that the access modifiers for A, B and C are circular, circular and unchecked, respectively. Even though there is only one matrix involved, there are effectively two ways of accessing the data in this matrix.

Now, to make things even more complicated, there are also put access modes. But for implementation complexity reasons (and more importantly, to avoid unforeseen data races in parallel algorithms), the number of options have been reduced to three:

safe (default) % ignore writing outside the boundaries

unchecked % writing outside the boundaries = crash!

checked % error when writing outside the boundariesThis means that 'circular, 'mirror, and 'clamped are mapped to 'safe when writing values to the matrix. For example:

A : vec'circular = [1, 2, 3]

A[-1] = 0 % Value neglectedThe advantage will then be that you can write code such as:

A : mat'circular = imread("lena_big.tif")[:,:,1]

B = zeros(size(A))

B[-127..128,-127..128] = A[-127..128,-127..128]Boundary Access Testing through High Level Inference

To avoid vector/matrix boundary checks, the modifier 'unchecked can be used. This often gives a moderate performance benefit, especially when used extensively inside kernel functions.

The use of 'unchecked requires the programmer to know that the index boundaries will not be exceeded (e.g., after extensive testing with the default, 'checked). To avoid “Segment violations” causing the Redshift GUI to crash, Quasar has a setting “Out of bounds checks” that will internally override the 'unchecked modifiers and convert all these modifiers to 'checked. However, with this technique, the performance benefit of 'unchecked is lost unless the programmer switches off the “Out of bounds checks”.

Solution: compile-time high level inference

As a better solution, the compiler will now infer from the context several cases in which the boundary checks can be dropped. Consider the following use-case:

im = imread("lena_big.tif")[:,:,1]

im_out = zeros(size(im))

for m=0..size(im,0)-1

for n=0..size(im,1)-1

im_out[m,n] = 4*im[m,n]

endfor

endforIn this case, the Quasar compiler knows that the entire image is traversed with the double loop (checking the dimensions size(im,0), size(im,1)). Moreover, because im_out = zeros(size(im)), the compiler internally assumes that size(im_out) == size(im). This way, the compiler can decide that the boundary accesses im_out[m,n] and im[m,n] are all safe and can be dropped! Even when the option “Out of bounds checks” is turned on!

This way, existing Quasar programs can benefit from performance improvements without any further change. In some cases, the programmer can help the compiler by making assertions on the matrix dimensions. For example:

function [] = process(im : mat, im_out : mat)

assert(size(im) == size(im_out))

for m=0..size(im,0)-1

for n=0..size(im,1)-1

im_out[m,n] = 4*im[m,n]

endfor

endfor

endfunctionWhat basically happens, is that the boundary checks are moved outside the loop: the assertion will check the dimensions of im_out and assure that the two-dimensional loop is safe! The assertion check outside the loop is much faster than boundary checks for every position inside the loop.

Currently, the inference possibilities of the compiler are limited to simple (but often occurring) use-cases. In the future, there will be more cases that the compiler can recognize. # Dynamic Memory Allocation on CPU/GPU

In some algorithms, it is desirable to dynamically allocate memory inside __kernel__ or __device__ functions, for example:

When the algorithm processes blocks of data for which the maximum size is not known in advance.

When the amount of memory that an individual thread uses is too large to fit in the shared memory or in the registers. The shared memory of the GPU consists of typically 32K, that has to be shared between all threads in one block. For single-precision floating point vectors

vecor matricesmatand for 1024 threads per block, the maximum amount of shared memory is 32K/(1024*4) = 8 elements. The size of the register memory is also of the same order: 32 K for CUDA compute architecture 2.0.

Example: brute-force median filter

So, suppose that we want to calculate a brute-force median filter for an image (note that there exist much more efficient algorithms based on image histograms, see immedfilt.q). The filter could be implemented as follows:

we extract a local window per pixel in the image (for example of size

13x13).the local window is then passed to a generic median function, that sorts the intensities in the local window and returns the median.

The problem is that there may not be enough register memory for holding a local window of this size. 1024 threads x 13 x 13 x 4 = 692K!

The solution is then to use a new Quasar runtime & compiler feature: dynamic kernel memory. In practice, this is actually very simple: first ensure that the compiler setting “kernel dynamic memory support” is enabled. Second, matrices can then be allocated through the regular matrix functions zeros, complex(zeros(.)), uninit, ones.

For the median filter, the implementation could be as follows:

% Function: median

% Computes the median of an array of numbers

function y = __device__ median(x : vec)

% to be completed

endfunction

% Function: immedfilt_kernel

% Naive implementation of a median filter on images

function y = __kernel__ immedfilt_kernel(x : mat, y : mat, W : int, pos : ivec2)

% Allocate dynamic memory (note that the size depends on W,

% which is a parameter for this function)

r = zeros((W*2)^2)

for m=-W..W-1

for n=-W..W-1

r[(W+m)*(2*W)+W+n] = x[pos+[m,n]]

endfor

endfor

% Compute the median of the elements in the vector r

y[pos] = median(r)

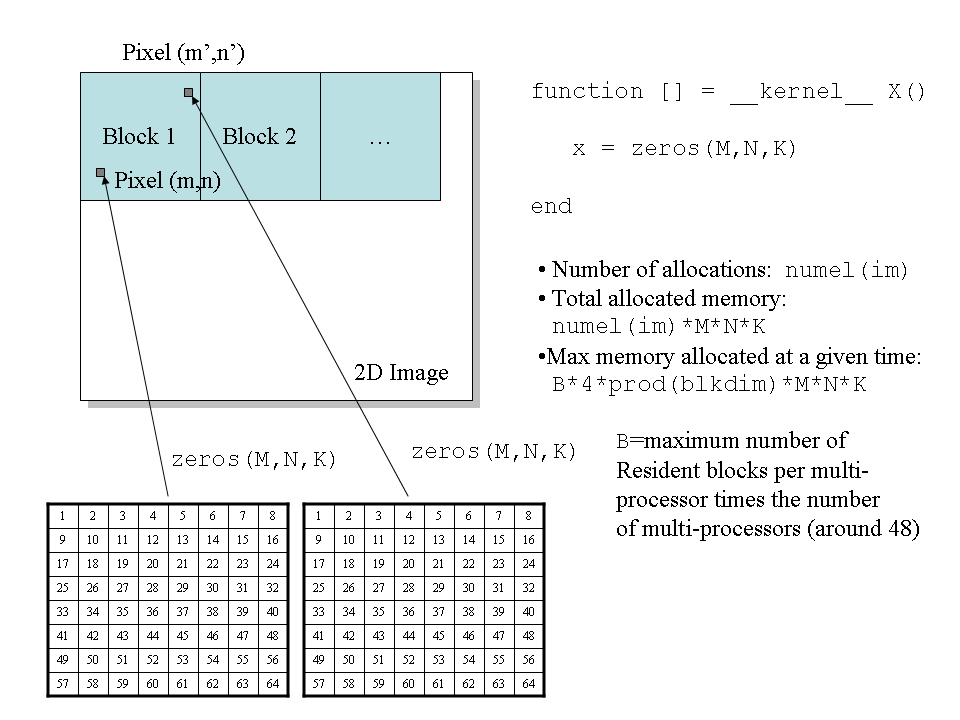

endfunctionFor W=4 this algorithm is illustrated in the figure below:

Figure 1. dynamic memory allocation inside a kernel function.

Figure 1. dynamic memory allocation inside a kernel function.

Parallel memory allocation algorithm

To support dynamic memory, a special parallel memory allocator was designed. The allocator has the following properties:

The allocation/disposal is distributed in space and does not use lists/free-lists of any sort.

The allocation algorithm is designed for speed and correctness.

To accomplish such a design, a number of limitations were needed:

The minimal memory block that can be allocated is 1024 bytes. If the block size is smaller then the size is rounded up to 1024 bytes.

When you try to allocate a block with size that is not a pow-2 multiple of 1024 bytes (i.e.

1024*2^NwithNinteger), then the size is rounded up to a pow-2 multiple of 1024 bytes.The maximal memory block that can be allocated is 32768 bytes (=2^15 bytes). Taking into account that this can be done per pixel in an image, this is actually quite a lot!

The total amount of memory that can be allocated from inside a kernel function is also limited (typically 16 MB). This restriction is mainly to ensure program correctness and to keep memory free for other processes in the system.

It is possible to compute an upper bound for the amount of memory that will be allocated at a given point in time. Suppose that we have a kernel function, that allocates a cube of size M*N*K, then:

max_memory = NUM_MULTIPROC * MAX_RESIDENT_BLOCKS * prod(blkdim) * 4*M*N*KWhere prod(blkdim) is the number of elements in one block, MAX_RESIDENT_BLOCKS is the maximal number of resident blocks per multi-processor and NUM_MULTIPROC is the number of multiprocessors.

So, suppose that we allocate a matrix of size 8x8 on a Geforce 660Ti then:

max_memory = 5 * 16 * 512 * 4 * 8 * 8 = 10.4 MBThis is still much smaller than what would be needed if one would consider pre-allocation (in this case this number would depend on the image dimensions!)

Comparison to CUDA malloc

CUDA has built-in malloc(.) and free(.) functions that can be called from device/kernel functions, however after a few performance tests and seeing warnings on CUDA forums, I decided not to use them. This is the result of a comparison between the Quasar dynamic memory allocation algorithm and that of NVidia:

Granularity: 1024

Memory size: 33554432

Maximum block size: 32768

Start - test routine

Operation took 183.832443 msec [Quasar]

Operation took 1471.210693 msec [CUDA malloc]

SuccessI obtained similar results for other tests. As you can see, the memory allocation is about 8 times faster using the new apporach than with the NVidia allocator.

Why better avoiding dynamic memory

Even though the memory allocation is quite fast, to obtain the best performance, it is better to avoid dynamic memory:

The main issue is that kernel functions using dynamic memory also require several read/write accesses to the global memory. Because dynamically allocated memory has typically the size of hundreds of KBs, the data will not fit into the cache (of size 16KB to 48KB). Correspondingly: the cost of using dynamic memory is in the associated global memory accesses!

Please note that the compiler-level handling of dynamic memory is currently in development. As long as the memory is “consumed” locally as in the above example, i.e. not written to external data structures the should not be a problem.

CUDA - Device Functions Across Modules

Device functions are useful to implement common CPU and GPU routines once in order to later use them from different other kernel or device functions (also see the function overview).

An example is the sinc function in system.q:

sinc = __device__ (x : scalar) -> (x == 0.0) ? 1.0 : sin(pi*x)/(pi*x)By this definition, the sinc function can be used on scalar numbers from both host functions as kernel/device functions.

However, when a device function is defined in one module and used in an another module, there is one problem for the CUDA engine. The compiler will give the following error:

Cannot currently access device function 'sinc' defined in

'system.q' from 'foo.q'. The reason is that CUDA 4.2 does not

support static linking so device functions must be defined in the

same compilation unit.By default, __device__ functions are statically linked (in C/C++ linker terminology). However, CUDA modules are standalone, which makes it impossible to refer from device functions in one module to another module.

There are however two work-arounds:

the first work-around is to define the function using a lambda expression, by making sure that function inlining is enabled (the compiler setting

COMPILER_LAMBDAEXPRESSION_INLININGshould have valueOnlySuitableorAlways).This way, the function will be expanded inline by the Quasar compiler and the problem is avoided.

the second work-around is to use function pointers to prevent static linking. Obviously, this has an impact on performance, therefore the compiler setting

COMPILER_USEFUNCTIONPOINTERSneeds to be set toAlways(default value =SmartlyAvoid).

If possible, try to define the functions in such a way that device functions are only referred to from the module in which they are defined. If this is not possible/preferred, use work-around #1 (i.e. define the function as a lambda expression and enable automatic function inlining). # CUDA Synchronization on Global Memory

Because the amount of shared memory is limited (typically 32K), one may think that straightforward extension is to use the global memory for inter-thread communication, because the global memory does not have this size restriction (typically it is 1-2GB or more). This would then give a small performance loss compared to shared memory, but the benefit of caching and sharing data between threads could make this option attractive for certain applications.

However, this may be a bit more trickier than expected: in practice, this turns out not to work at all!

Consider for example the following kernel, that performs a 3x3 mean filter where the shared memory has been replaced by global memory (tmp):

tmp = zeros(64,64,3)

function [] = __kernel__ kernel(x : cube, y : cube, pos : vec3, blkpos : vec3, blkdim : vec3)

tmp[blkpos] = x[pos]

if blkpos[0] < 2

tmp[blkpos+[blkdim[0],0,0]] = x[pos+[blkdim[0],0,0]]

endif

if blkpos[1] < 2

tmp[blkpos+[0,blkdim[1],0]] = x[pos+[0,blkdim[1],0]]

endif

if blkpos[0] < 2 && blkpos[1] < 2

tmp[blkpos+[blkdim[0],blkdim[1],0]] = x[pos+[blkdim[0],blkdim[1],0]]

endif

syncthreads

y[pos] = (tmp[blkpos+[0,0,0]] +

tmp[blkpos+[0,1,0]] +

tmp[blkpos+[0,2,0]] +

tmp[blkpos+[1,0,0]] +

tmp[blkpos+[1,1,0]] +

tmp[blkpos+[1,2,0]] +

tmp[blkpos+[2,0,0]] +

tmp[blkpos+[2,1,0]] +

tmp[blkpos+[2,2,0]]) * (1/9)

endfunction

im = imread("lena_big.tif")

im_out = zeros(size(im))

parallel_do(size(im_out),im,im_out,kernel)

Figure 1. Using shared memory: the expected outcome of a 3x3 average filter.

Figure 2. Using global memory: not what one would expect!

Although the algorithm works correctly with shared memory, for global memory the output is highly distorted. What is going on here?

The assumption that is made in case of shared memory, is that the blocks are processed by the GPU completely independently from each other.

However, for obvious performance reasons, this is not the case, and the GPU starts already block #2 while finishing block #1.

Shared memory is a special case, because shared memory actually resides on the multiprocessors, and a thread can only access the shared memory from the multiprocessor that it is running on.

The solution would then be to do to make the global memory access block-dependent. However, doing so would be very architecture-dependent and not portable. So this is not recommended. # CUDA - Dealing with low kernel occupancy issues

I recently discovered an issue that led the runtime to execute a kernel at a low occupancy (NVidia explanation). The problem was quite simple: the multiplication of a (3xN) matrix with an (Nx3) matrix, where N is very large, e.g. 2^18 (resulting in a 3x3 matrix).

The runtime currently uses the simplest possible algorithm for multiplication, using a block of size 3x3. However, the occupancy in this case is approx. 9/MAX_THREADS, which is about 3.33% in my case. This means that the performance of this simple operation is (theoretically) decreased by up to a factor 30! Only 9 threads are spawn, while the hardware can support up to 1024. (Note: in the latest versions of Quasar Redshift, the occupancy can be viewed in the profiler).

For this reason, I wrote a Quasar program to use a larger block size. The program also uses shared memory with the parallel sum reduction, so even the quite simple matrix multiplication results in an advanced implementation:-)

This program will be integrated in the runtime, so in the future, the user does not have to worry about this anymore. Nevertheless, it can be good to know how certain operations are implemented efficiently internally.

function y = matrix_multiply(a, b)

[M,N] = [size(a,0),size(b,1)]

y = zeros(M,N)

function [] = __kernel__ kernel(a : mat'unchecked, b : mat'unchecked, blkdim : ivec3, blkpos : ivec3)

n = size(a,1)

bins = shared(blkdim)

nblocks = int(ceil(n/blkdim[0]))

% step 1 - parallel sum

val = 0.0

for m=0..nblocks-1

if blkpos[0] + m*blkdim[0] < n

c = blkpos[0] + m*blkdim[0]

val += a[blkpos[1],c] * b[c,blkpos[2]]

endif

endfor

bins[blkpos] = val

% step 2 - reduction

syncthreads

bit = 1

while bit < blkdim[0]

if mod(blkpos[0],bit*2) == 0

bins[blkpos] += bins[blkpos + [bit,0,0]]

endif

syncthreads

bit *= 2

endwhile

% write output

if blkpos[0] == 0

y[blkpos[1],blkpos[2]] = bins[0,blkpos[1],blkpos[2]]

endif

endfunction

P = prevpow2(max_block_size(kernel,[size(a,1),M*N])[0])

parallel_do([P,M,N],a,b,kernel)

endfunction

function [] = main()

im = imread("lena_big.tif")

n = prod(size(im,0..1))

x = reshape(im,[n,3])

tic()

for k=0..99

y1 = transpose(x)*x

endfor

toc() % 3.5 seconds

tic()

for k=0..99

y2 = matrix_multiply(transpose(x),x)

endfor

toc() % 1.3 seconds

print y1

print y2

endfunctionCUDA - Memory manager enhancements I (advanced)

There are some problems operating on large images that do not fit into the GPU memory. The solution is to provide a FAULT-TOLERANT mode, in which the operations are completely performed on the CPU (we assume that the CPU has more memory than the GPU). Of course, running on the CPU comes at a performance hit. Therefore I will add some new configurable settings in this enhancement.

Please note that GPU memory problems can only occur when the total amount of memory used by one single kernel function > (max GPU memory - reserved mem) * (1 - fragmented mem%). For a GPU with 1 GB, this might be around 600 MB. Quasar automatically transfers memory buffers back to the CPU memory when it is running out of GPU space. Nevertheless, this may not be sufficient, as some very large images can take all the space of the GPU memory (for example 3D datasets).

Therefore, three configurable settings are added to the runtime system (Quasar.Redshift.config.xml):

RUNTIME_GPU_MEMORYMODELwith possible values:

- SmallFootPrint - A small memory footprint - opts for conservative memory allocation leaving a lot of GPU memory available for other programs in the system

- MediumFootprint (*) - A medium memory footprint - the default mode

- LargeFootprint - chooses aggressive memory allocation, consuming most of the available GPU memory quickly, and leaving no memory available for other applications. This option is recommended for GPU memory intensive applications.

RUNTIME_GPU_SCHEDULINGMODEwith possible values:

- MaximizePerformance - Attempts to perform as many operations as possible on the GPU (potentially leading to memory failure if there is not sufficient memory available. Recommended for systems with a lot of GPU memory).

- MaximizeStability (*) - Performs operations on the CPU if there is not GPU memory available. For example, processing 512 MB images when the GPU only has 1 GB memory available. The resulting program may be slower. (FAULT-TOLERANT mode)

RUNTIME_GPU_RESERVEDMEM

- The amount of GPU memory reserved for the system (in MB) and/or other processes. The Quasar runtime system will not use the reserved memory (so that other desktop programs can still run correctly). Default value = 160 MB. This value can be decreased at the user’s risk to obtain more GPU memory for processing (desktop applications such as Firefox may complain…)

Please note that the “imshow” function also makes use of the reserved system GPU memory (the CUDA data is copied to an OpenGL texture).

(*) default

CUDA Error handling

For the Quasar run-time, the CUDA error handling mechanism is quite tricky, in the sense that when an error occurs, the GPU device often has to be reinitialized. Consequently, at this time, all data stored in the GPU memory is lost.

The following article gives a more detailed overview of CUDA’s error handling mechanism. http://www.drdobbs.com/parallel/cuda-supercomputing-for-the-masses-part/207603131.

Some considerations:

- “A human-readable description of the error can be obtained from”:

char *cudaGetErrorString(cudaError_t code);In practice, this function will return useless “Unknown error”, “Invalid context”, “Kernel execution failed”

“CUDA also provides a method, cudaGetLastError, which reports the last error for any previous runtime call in the host thread. This has multiple implications: the asynchronous nature of the kernel launches precludes explicitly checking for errors with cudaGetLastError.” => This simply means that error handling does not work in asynchronous mode (concurrent kernel execution).

“When multiple errors occur between calls to cudaGetLastError, only the last error will be reported. This means the programmer must take care to tie the error to the runtime call that generated the error or risk making an incorrect error report to users” => There is no way to find if any other error is raised previously, or which component caused which error.

Practically, when CUDA returns an error, the Quasar runtime attempts to guess what is going on. This can be particularly daunting, especially when concurrent kernel execution is enabled (the flame icon in Redshift).

However, since CUDA 5.5, a stream callback mechanism was introduced and since recently, Quasar is able to use this mechanism for concurrent kernels, even when multiple kernels are launched in sequence. This allows correct handling of errors raised in kernel function, such as:

- matrix out of boundary access (

'checkedmode) - a call to the function

error - an assertion failure

assert(false) - an integer overflow

- NaN or Inf (when checking enabled)

- out of shared memory

- out of dynamic memory

- CUDA unknown error

Users working with CUDA 4.2 (or earlier) should either 1) turn off the concurrent kernel execution or 2) upgrade to CUDA 5.5.

Quasar - slow shared memory, why?

Sometimes, when you use shared memory in Quasar programs, you may notice that using shared memory is significantly slower than directly accessing the global memory, which is very counterintuitive.

The reason is simple: by default, Quasar performs boundary checks at runtime, even inside the kernel functions. This is to ensure that all your kernels are programmed correctly and to catch otherwise undetectable errors very early.

Instead of using the default access:

xr = shared(M,N)it may be useful to disable the boundary checks for the variable:

xr : mat'unchecked = shared(M,N)Note that boundary checking is an option that can be enabled/disabled in the program settings dialog box, or alternatively by editing Quasar.Redshift.config.xml:

<setting name="COMPILER_OUTOFBOUNDS_CHECKS">

<value>False</value>

</setting>Finally, see also this trick that allows you to further optimize kernel functions that use shared memory. # CUDA: Specifying shared memory bounds

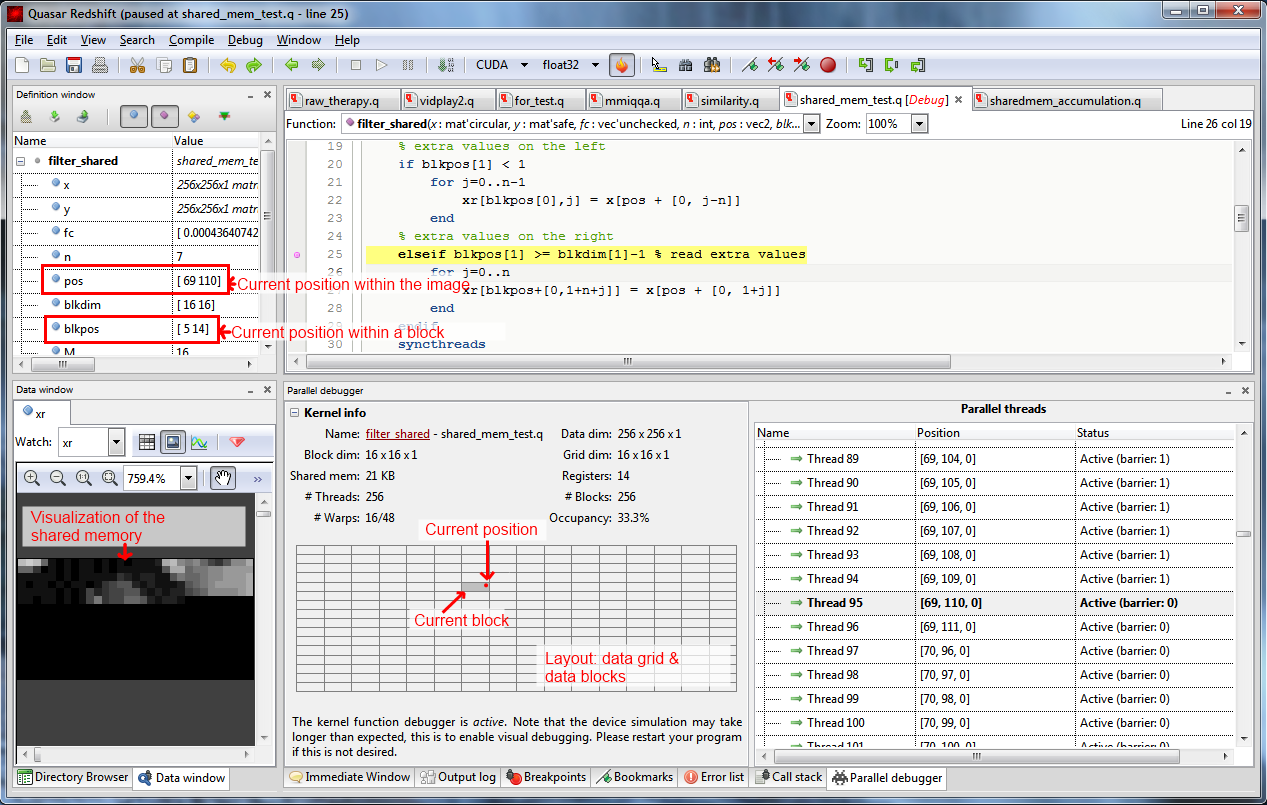

In this Section, a technique is described to use the shared memory of the GPU in a more optimal way, through specification of the amount of memory that a kernel function will actually use.

The maximum amount of shared memory that Quasar kernel functions can currently use is 32K (32768 bytes). Actually, the maximum amount of shared memory of the device is 48K (16K is reserved for internal purposes). The GPU may process several blocks at the same time, however there is one important restriction:

The total number of blocks that can be processed at the same time also depends on the amount of shared memory that is used by each block.

For example, if one block uses 32K, then it is not possible to launch a second block at the same time, because 2 x 32K > 48K. In practice, your kernel function may only use e.g. 4K instead of 32K. This would then allow 48K/4K = 12 blocks to be processed at the same time.

Originally, the Quasar compiler either reserved 0K or 32K shared memory per block, depending on whether the kernel function allocated shared memory. Shared memory is dynamically allocated from within the kernel function. This actually deteriorates the performance, because N*32K < 48K requires N=1. So there is only one block that can be launched simultaneously.

In the latest version, the compiler is able to infer the total amount of shared memory that is being used through the proportional logic system. For example, if you request:

x = shared(20,3,6)the compiler will reserve 20 x 3 x 6 x 4 bytes = 1440 bytes for the kernel function. Often the arguments of the function shared are non-constant. In this case you can use assertions.

assert(M<8 && N<20 && K<4)

x = shared(M,N,K)Due to the above assertion, the compiler is able to infer the amount of required shared memory. In this case: 8 x 20 x 4 x 4 bytes = 2560 bytes. The compiler then gives the following message:

Information: shared_mem_test.q - line 17: Calculated an upper bound for

the amount of shared memory: 2560 bytesThe assertion also allows the runtime system to check whether not too much shared memory will be allocated. In case N would exceed 20, the runtime system will give an error message.

Note: the compiler does not recognize yet all possible assertions that restrict the amount of shared memory. For example assert(numel(blkdim)<=1024); x = shared(blkdim) will not work yet. In the future, more use cases like this will be accepted.

Conclusion:

When implementing a kernel function that uses shared memory, it is recommended to give hints to the compiler about the amount of shared memory that the function will actually use. This can be done using assertions.

The best is to keep the amount of memory used by a kernel function as low as possible. This allows multiple blocks to be processed in parallel. # Assert the Truth, Unassert the Untruth

Quasar has a logic system, that is centered around the assert function and that can be useful for several reasons:

Assertions can be used for testing a specified condition, resulting in a runtime error (

error) if the condition is not met:assert(positiveNumber>0,"positiveNumber became negative while it shouldn't")Assertions can also help the optimization system. For example, the type of variables can be “asserted” using type assertions:

assert(type(cubicle, "cube[cube]"))The compiler can then verify the type of the variable

cubicleand if it is not known at this stage, knowledge can be inserted into the compiler, resulting in the compilation message:assert.q - Line 4: [info] 'cubicle' is now assumed to be of type 'cube[cube]'.At runtime, the assert function just behaves like usual, resulting in an error if the condition is not met.

Assertions are useful in combination with reduction-where clauses:

reduction (x : scalar) -> abs(x) = x where x >= 0If we previously assert that

xis a positive number, then this assertion will eliminate the runtime check forx >= 0.Assertions can be used to cut branches:

assert(x > 0 && x < 1) if x < 0 ... endifHere, the compiler will determine that the

if-block will never be executed, so it will destroy the entire content of theif-block, resulting in a compilation message:assert.q - Line 10: [info] if-branch is cut due to the assertions `x > 0 && x < 1`.Similarly, pre-processor branches can be constructed with this approach.

Assertions can be combined with generic function specialization. Later more about this.

It is not possible to fool the compiler. For example, if the compiler can determine at compile-time that the assertion will never be met, an error will be generated, and it will not be even possible to run the program.

Logic system

The Quasar compiler has now a propositional logic system, that is able to “reason” about previous assertions. Also, different assertions can be combined using the logical operators AND &&, OR || and NOT !.

There are three meta functions that help with assertions:

$check(proposition)checks whetherpropositioncan be satisfied, given the previous set of assertions, resulting in three possible values:"Valid","Satisfiable"or"Unsatisfiable".$assump(variable)lists all assertions that are currently known about a variable, including the implicit type predicates that are obtained through type inference. Note that the result of$assumpis an expression, so for visualization it may be necessary to convert it to a textual representation using$str(.)(to avoid the expression from being evaluated).$simplify(expr)simplifies logic expressions based on the knowledge that is inserted through assertions.

Types of assertions

There are different types of assertions that can be combined in a transparent way.

Equalities

The most simple cases of assertions are the equality assertions a==b. For example:

symbolic a, b

assert(a==4 && b==6)

assert($check(a==5)=="Unsatisfiable")

assert($check(a==4)=="Valid")

assert($check(a!=4)=="Unsatisfiable")

assert($check(b==6)=="Valid")

assert($check(b==3)=="Unsatisfiable")

assert($check(b!=6)=="Unsatisfiable")

assert($check(a==4 && b==6)=="Valid")

assert($check(a==4 && b==5)=="Unsatisfiable")

assert($check(a==4 && b!=6)=="Unsatisfiable")

assert($check(a==4 || b==6)=="Valid")

assert($check(a==4 || b==7)=="Valid")

assert($check(a==3 || b==6)=="Valid")

assert($check(a==3 || b==5)=="Unsatisfiable")

assert($check(a!=4 || b==6)=="Valid")

print $str($assump(a)),",",$str($assump(b)) % prints (a==4),(b==6)Here, we use symbolic to declare symbolic variables (variables that are not to be “evaluated”, i.e. translated into their actual value since they don’t have a specific value). Next, the function assert is used to test whether the $check(.) function works correctly (=self-checking).

Inequalities

The propositional logic system can also work with inequalities:

symbolic a

assert(a>2 && a<4)

assert($check(a>1)=="Valid")

assert($check(a>3)=="Satisfiable")

assert($check(a<3)=="Satisfiable")

assert($check(a<2)=="Unsatisfiable")

assert($check(a>4)=="Unsatisfiable")

assert($check(a<=2)=="Unsatisfiable")

assert($check(a>=2)=="Valid")

assert($check(a<=3)=="Satisfiable")

assert($check(!(a>3))=="Satisfiable")Type assertions

As in the above example:

assert(type(cubicle, "cube[cube]"))Please note that assertions should not be used with the intention of variable type declaration. To declare the type of certain variables there is a more straightforward approach:

cubicle : cube[cube]Type assertions can be used in functions that accept generic ?? arguments, then for example to ensure that a cube[cube] is passed depending on another parameter.

User-defined properties of variables

It is also possible to define “properties” of variables, using a symbolic declaration. For example:

symbolic is_a_hero, Jan_AeltermanThen you can assert:

assert(is_a_hero(Jan_Aelterman))Correspondingly, if you perform the test:

print $check(is_a_hero(Jan_Aelterman)) % Prints: Valid

print $check(!is_a_hero(Jan_Aelterman)) % Prints: UnsatisfiableIf you then try to assert the opposite:

assert(!is_a_hero(Jan_Aelterman))The compiler will complain:

assert.q - Line 119: NO NO NO I don't believe this, can't be true!

Assertion '!(is_a_hero(Jan_Aelterman))' is contradictory with 'is_a_hero(Jan_Aelterman)'Unassert

In some cases, it is neccesary to undo certain assertions that were previously made. For this task, the function unassert can be used:

unassert(propositions)This function only has a meaning at compile-time; at run-time nothing needs to be done.

For example, if you wish to reconsider the assertion is_a_hero(Jan_Aelterman) you can write:

unassert(is_a_hero(Jan_Aelterman))

print $check(is_a_hero(Jan_Aelterman)) % Prints: most likely not

print $check(!is_a_hero(Jan_Aelterman)) % Prints: very likelyAlternatively you could have written:

unassert(!is_a_hero(Jan_Aelterman))

print $check(is_a_hero(Jan_Aelterman)) % Prints: Valid

print $check(!is_a_hero(Jan_Aelterman)) % Prints: UnsatisfiableReductions with where-clauses

Recently, reduction where-clauses have been implemented. The where clause is a condition that determines at runtime (or at compile time) whether a given reduction may be applied. There are two main use cases for where clauses:

- To avoid invalid results: In some circumstances, applying certain reductions may lead to invalid results (for example a real-valued sqrt function applied to a complex-valued input, derivative of tan(x) in pi/2…)

- For optimization purposes.

For example:

reduction (x : scalar) -> abs(x) = x where x >= 0

reduction (x : scalar) -> abs(x) = -x where x < 0In case the compiler has no information on the sign of x, the following mapping is applied:

abs(x) -> x >= 0 ? x : (x < 0 ? -x : abs(x))And the evaluation of the where clauses of the reduction is performed at runtime. However, when the compiler has information on x (e.g. assert(x <= -1)), the mapping will be much simpler:

abs(x) -> -xNote that the abs(.) function is a trivial example, in practice this could be more complicated:

reduction (x : scalar) -> some_op(x, a) = superfast_op(x, a) where 0 <= a && a < 1

reduction (x : scalar) -> some_op(x, a) = accurate_op(x, a) where 1 <= aMeta (or macro) functions

Note: this section gives more advanced info about how internal routines of the compiler can be accessed from user code.

Quasar has a special set of built-in functions, that are aimed at manipulating expressions at compile-time (although in the future the implementation may also allow them to be used at run-time). The functions are special, because actually, they do not follow the regular evaluation order (i.e. they can be evaluated from the outside to the inside of the expression, depending on the context). To make the difference clear with the regular functions, these functions start with prefix $.

For example, x is an expression, as well as x+2 or (x+y)*(3*x)^99. A string can be converted (at runtime) to an expression using the function eval. This is useful for runtime processing of expressions for example entered by the user. However, the opposite is also possible:

print $str((x+y)*(3*x)^99)

% Prints "(x+y)*(3*x)^99"This is similar to the string-izer macro symbol in C:

#define str(x) #xHowever, there are a lot of other things that can be done using meta functions. For example, an expression can be evaluated at compile-time using the function $eval (which differs from eval)

print $eval(log(pi/2))

% Prints 0.45158273311699, but the result is computed at compile-time.The $eval function also works when there are constant variables being referred (i.e. variables whose values are known at compile-time).

Although this seems quite trivial, this technique opens new doors for compile-time manipulation of expressions that are completely different from C/C++ but somewhat similar to Maple or LISP macros).

Here is a small summary of the new meta functions in Quasar:

$eval(.): compile-time evaluation of expressions$str(.): conversion of an expression to string$subs(a=b,.): substitution of a variable by another variable or expression$check(.): checks the satisfiability of a given condition (the result is either valid, satisfiable or unsatisfiable), based on the information that the compiler has at this point.$assump(.): returns an expression with the assertions of a given variable$simplify(.): simplifies boolean expressions (based on the information of the compiler, for example constant values etc.)$args[in](.): returns an expression with the input arguments of a given function.$args[out](.): returns an expression with the input arguments of a given function.$nops(.): returns the number of operands in the expression$op(.,n): returns the n-th operand of the expression$ubound(.): calculates an upper bound for the given expression$specialize(func,.): performs function specialization$inline(lambda(...)): performs inlining of lambda expressions$ftype(x)$withx="__host__"/"__device__"/"__kernel__": determines whether we are inside a host, device or kernel function.

Notes:

Most of these functions (e.g.

$eval,$check,$specializeand$inline) are only provided for testing and should not be used from user-code.The function

$ftypeis useful in combination with conditional reductions, to express that the reduction may only be applied in a device/kernel or host function (also see functions). For example:reduction x -> log(x) = x - 1 where abs(x - 1) < 1e-1 && $ftype("__device__")means that the reduction for

log(x)may only be applied inside__device__functions, when the conditionabs(x - 1) < 1e-1is met. Here, this is simply a linear approximation of the logarithm aroundx==1.

Examples

1. Copying type and assumptions from one variable to another

It is possible to write statements such as “assume the same about variable ‘a’ as what is assumed on ‘b’”. This includes the type of the variable (as in Quasar, the type specification is nothing more than a predicate).

a : int

assert(0 <= a && a < 1)

b : ??

assert($subs(a=b,$assump(a)))

print $str($assump(b))

% Prints "type(b,"int")) && 0 <= b && b < 1"Pre-processor branches

Sometimes there is a need to write code that is compiled conditionally, based on some input parameters. For example in C, for a conversion from floating point to integer:

#define TARGET_PLATFORM_X86

#if TARGET_PLATFORM_X86

int __declspec(naked) ftoi(float a)

{ _asm {

movss xmm0, [esp+4]

cvtss2si eax, xmm0

ret

}}

#else

int ftoi(float a)

{

return (int) a;

}